Trials and Campaigns

Note about BayBE

Catalyst uses BayBE under the hood. BayBE may evolve over time. This page explains parameters in non-technical terms as they are used in Catalyst today. For the detailed technical reference that Catalyst is aligned with, see the BayBE 0.13.2 documentation linked under Further reading (BayBE).

A trial is one completed run of your process: you choose parameter values, run the experiment, and record measured target results. BayBE uses those trials to recommend what to do next.

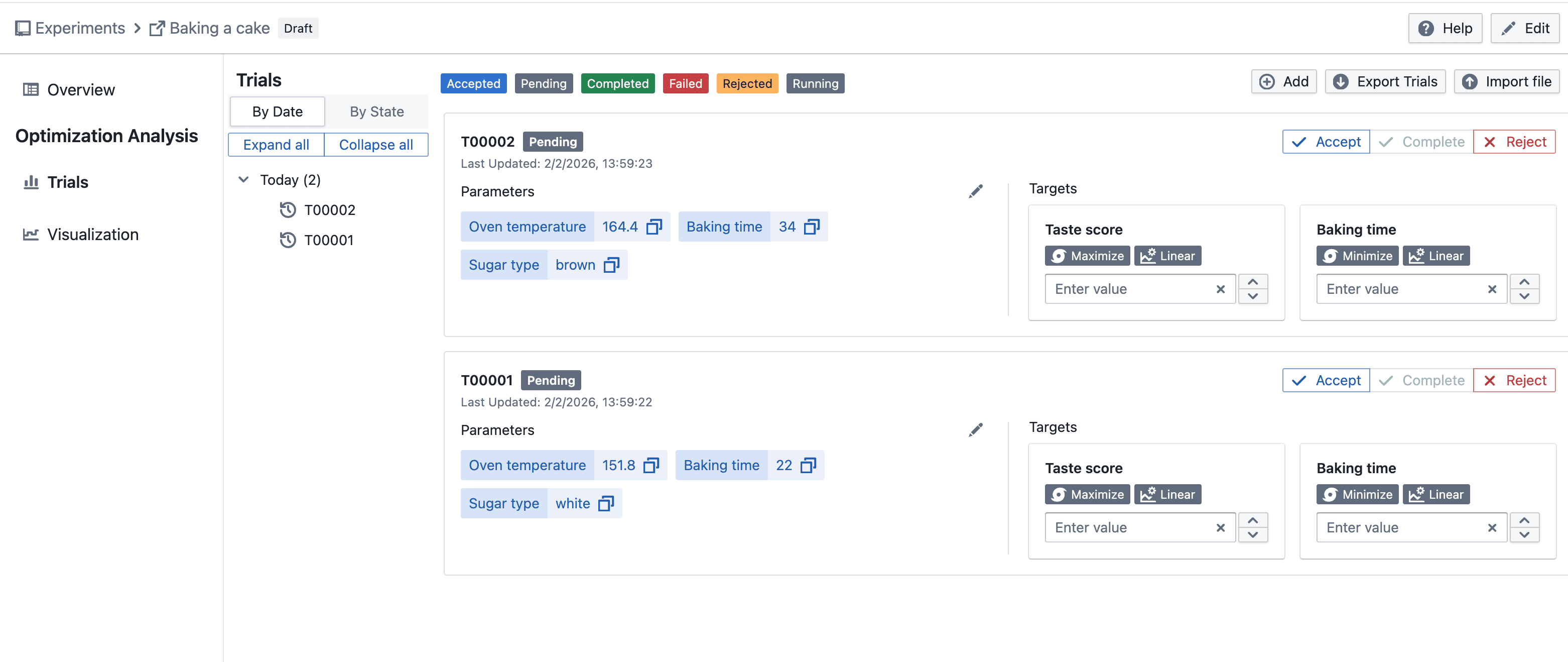

Where you see trials in Catalyst

Trials appear in two main places:

-

When creating an experiment

On the Create Experiment page, you define:- Targets (what you will measure per trial)

- Parameters (the knobs you can turn)

- Constraints (what is allowed)

This defines the BayBE campaign for that experiment. Once saved, you move on to running trials.

-

On the experiment detail page

When you open an existing experiment, you will see:-

A Trials section / tab listing all completed and pending trials

-

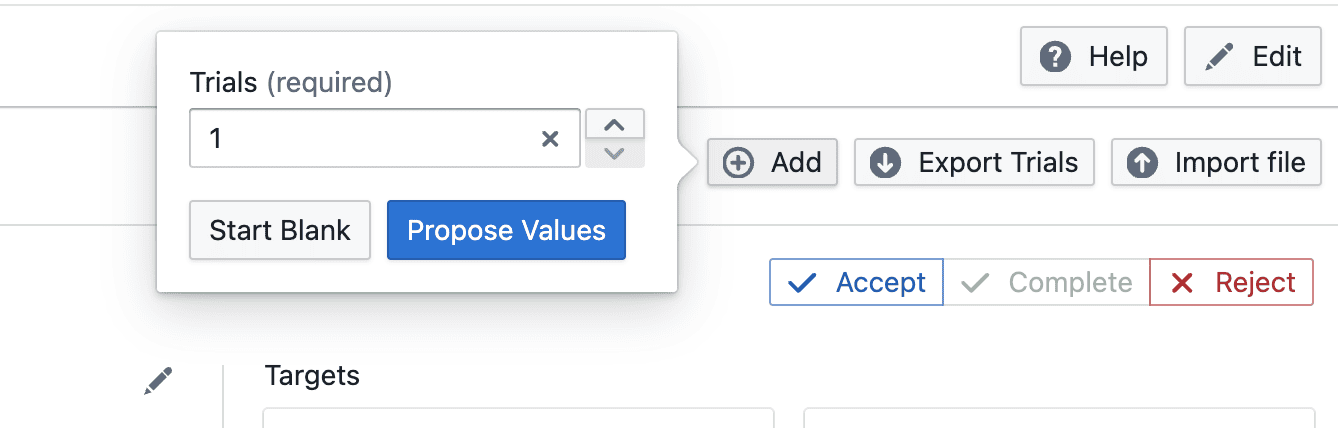

UI to create a blank or proposed values from BayBE for the next trial

-

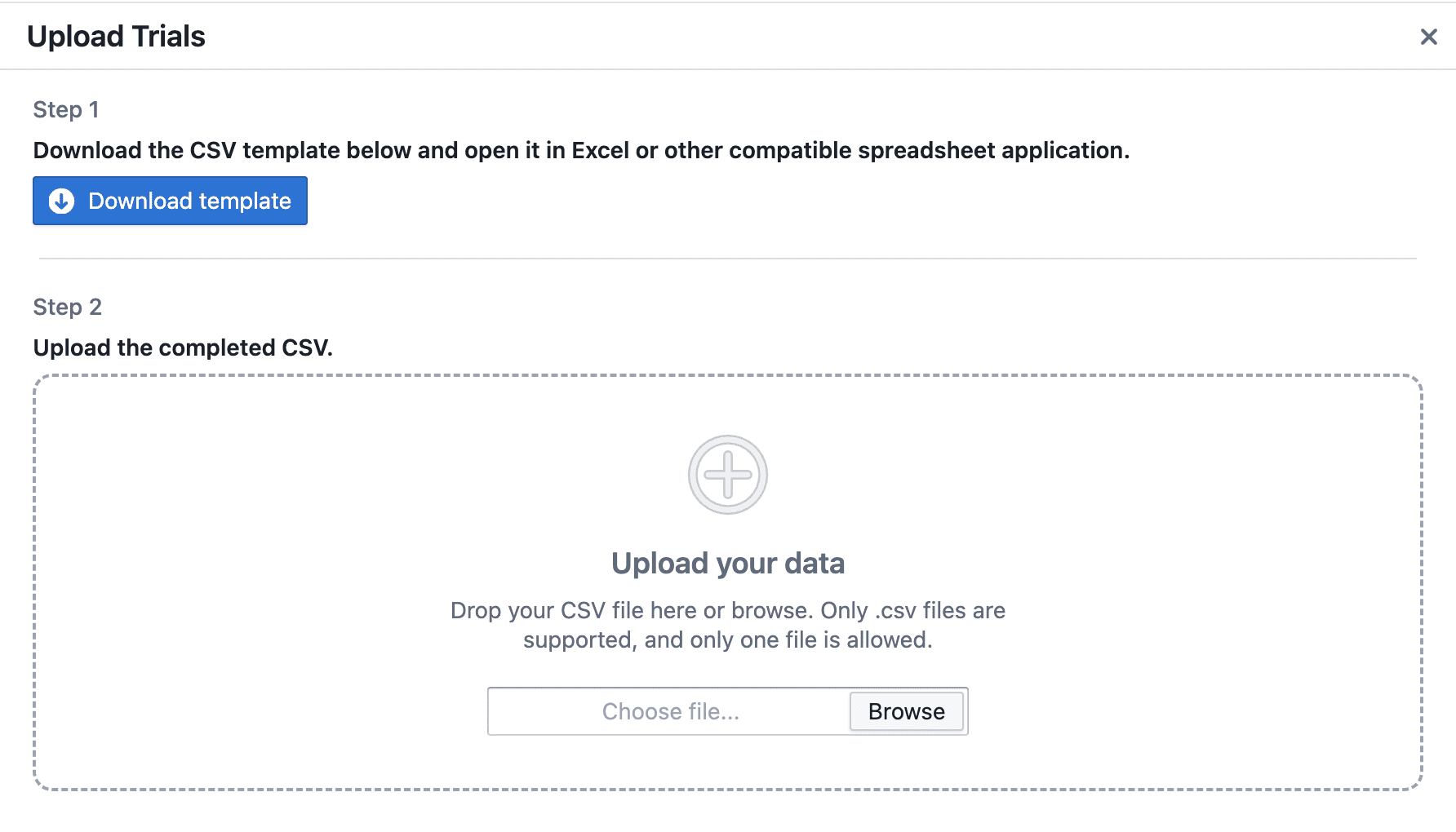

Able to upload existing trial data in batch (e.g., CSV upload)

This is where you actually add data to the campaign that BayBE will learn from.

-

What is a trial?

A trial contains:

- Parameter values used (inputs)

- Target measurements observed (outputs)

Think of each trial as one row in your experiment dataset.

Everyday analogy:

- For baking: one cake you bake with a specific oven temperature, baking time, and sugar type, then score for taste and texture.
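To make the "one row" idea concrete, here is a minimal sketch of the baking trial as a single record. The column names (`oven_temperature_c`, `taste_score`, and so on) and the helper `to_row` are hypothetical and chosen for illustration only; they are not Catalyst or BayBE identifiers.

```python
def to_row(parameters: dict, targets: dict) -> dict:
    """Combine the inputs and outputs of one trial into a single dataset row."""
    # A name used for both a parameter and a target would make the row ambiguous.
    overlap = parameters.keys() & targets.keys()
    if overlap:
        raise ValueError(f"names used as both parameter and target: {sorted(overlap)}")
    return {**parameters, **targets}


# Parameter values used (inputs) for one cake.
parameters = {"oven_temperature_c": 180, "baking_time_min": 35, "sugar_type": "brown"}

# Target measurements observed (outputs) for the same cake.
targets = {"taste_score": 7.5, "texture_score": 6.0}

row = to_row(parameters, targets)
```

Each further cake you bake and score would add one more row with the same columns.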

In Catalyst, trials are created via:

- Single-trial forms (e.g., a "Create trial" button that opens a form or drawer)

- Batch upload (e.g., a CSV upload popover that creates multiple trials at once)

How recommendations improve over time

As you add trials, BayBE updates what it has learned and recommends settings that balance:

- Exploration – learn in areas with little data

- Exploitation – refine around the best observed results
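The trade-off can be illustrated with a toy scoring rule. This sketch uses an upper-confidence-bound style score (predicted result plus a weighted uncertainty), which is a common textbook way to express the balance. It is an assumption for illustration and not necessarily the rule BayBE applies inside Catalyst. The candidate names and numbers are made up.

```python
def ucb_score(predicted_mean: float, uncertainty: float, exploration_weight: float) -> float:
    """Higher is better: reward good predictions and, optionally, high uncertainty."""
    return predicted_mean + exploration_weight * uncertainty


# Hypothetical candidates: (predicted result, how unsure the model is).
candidates = {
    "near_best_known": (8.0, 0.2),    # well-studied region, good predicted result
    "unexplored_region": (6.5, 2.5),  # little data, so the prediction is uncertain
}


def pick(candidates: dict, exploration_weight: float) -> str:
    """Return the name of the candidate with the highest score."""
    return max(
        candidates,
        key=lambda name: ucb_score(*candidates[name], exploration_weight),
    )


pure_exploitation = pick(candidates, exploration_weight=0.0)
balanced = pick(candidates, exploration_weight=1.0)
```

With no weight on uncertainty the rule stays near the best known result; with some weight it prefers the region where little is known.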

In the UI this typically appears as a "Recommend next" or similar action in the Trials area (for example, a button or menu entry that creates a new trial pre-filled with suggested parameter values).

Every new completed trial makes the next recommendation a bit smarter, as BayBE incorporates the new data.

Data quality tips

Good trials data makes BayBE more effective:

- Record "bad" results too – BayBE learns from failures as well as successes.

- Keep units and naming consistent across trials (e.g., always use the same unit for temperature and time).

- Avoid changing the meaning of a parameter or target mid-experiment; if definitions change, consider starting a new experiment.

- If you run replicates, be consistent in how you store them (separate trials vs averaged result).

In the UI this usually means:

- Using consistent column names and units when uploading batches.

- Being careful when editing experiment configuration after trials already exist.
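If you prepare batch files outside Catalyst, a quick pre-upload check can catch inconsistent column names before they reach the campaign. The following is a minimal sketch using only the Python standard library. The expected column names and the function `check_batch` are hypothetical; Catalyst's own upload validation may behave differently.

```python
import csv
import io

# Hypothetical column names; use the ones defined in your experiment.
EXPECTED_COLUMNS = ["oven_temperature_c", "baking_time_min", "sugar_type", "taste_score"]


def check_batch(csv_text: str, expected_columns: list[str]) -> list[str]:
    """Return human-readable problems; an empty list means no issues were found."""
    reader = csv.DictReader(io.StringIO(csv_text))
    header = reader.fieldnames or []
    problems = []

    missing = [c for c in expected_columns if c not in header]
    unexpected = [c for c in header if c not in expected_columns]
    if missing:
        problems.append(f"missing columns: {missing}")
    if unexpected:
        problems.append(f"unexpected columns: {unexpected}")

    # The header is line 1, so data starts at line 2 (assumes no multi-line fields).
    for line_no, row in enumerate(reader, start=2):
        empty = [c for c in expected_columns if c in row and not (row[c] or "").strip()]
        if empty:
            problems.append(f"line {line_no}: empty values for {empty}")
    return problems


good = "oven_temperature_c,baking_time_min,sugar_type,taste_score\n180,35,brown,7.5\n"
bad = "oven_temp_f,baking_time_min,sugar_type,taste_score\n356,35,brown,\n"

good_problems = check_batch(good, EXPECTED_COLUMNS)
bad_problems = check_batch(bad, EXPECTED_COLUMNS)
```

In the second file, the renamed temperature column (a unit change hidden in a new name) and the blank score are both reported, which is the kind of drift the tips above are meant to prevent.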

The algorithm works most efficiently when all suggested trials are completed before requesting a new set.

Only request the number of trials you actually intend to run.

You may also see recommendations that seem unintuitive or that repeat combinations you already know perform poorly. Run and record them anyway: these "bad" results are still valuable because they show the algorithm which regions to avoid, helping it converge more quickly toward the optimum. (Trust the process.)

Trial status semantics

The status of a trial determines whether and how it influences BayBE's learning and future recommendations.

Accepted

An Accepted trial is a valid experiment result.

- Included in optimization and learning.

- Contributes to learning regardless of outcome quality.

Accept trials that should inform future suggestions.

Completed

A Completed trial has finished and produced a result.

- Eligible to be accepted.

- Completion alone does not imply validity or usefulness.

Failed

A Failed trial did not produce a usable result due to a known issue (e.g. equipment failure or measurement error).

- Removed from measurements and not used for learning.

- Does not exclude the parameter combination.

- Similar parameters may still be suggested again.

Use Failed when the result does not reflect real system behavior.

Rejected

A Rejected trial is intentionally excluded by the user.

- Not used for learning.

- Still considered for exclusion, so similar parameter combinations are not recommended again.

Use Rejected to permanently avoid an invalid or undesirable region of the search space.

Summary

- Accepted → Valid, used for learning

- Completed → Finished, pending evaluation

- Failed → Excluded from measurements, may be retried

- Rejected → Excluded and actively avoided in future suggestions
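The status rules above can be read as two yes/no questions per trial: does it feed learning, and does it block similar suggestions? The sketch below encodes that reading. The `TrialStatus` enum and both functions are hypothetical names for illustration, not Catalyst or BayBE API. It also assumes that a Completed trial does not feed learning until it is accepted, which is an interpretation of "pending evaluation".

```python
from enum import Enum


class TrialStatus(Enum):
    ACCEPTED = "accepted"
    COMPLETED = "completed"
    FAILED = "failed"
    REJECTED = "rejected"


def used_for_learning(status: TrialStatus) -> bool:
    """Only accepted trials contribute measurements."""
    return status is TrialStatus.ACCEPTED


def blocks_similar_recommendations(status: TrialStatus) -> bool:
    """Only rejected trials steer future suggestions away from their parameters."""
    return status is TrialStatus.REJECTED


# Hypothetical trials: (parameter values, status).
trials = [
    ({"oven_temperature_c": 180}, TrialStatus.ACCEPTED),
    ({"oven_temperature_c": 200}, TrialStatus.COMPLETED),
    ({"oven_temperature_c": 220}, TrialStatus.FAILED),
    ({"oven_temperature_c": 260}, TrialStatus.REJECTED),
]

learn_from = [params for params, status in trials if used_for_learning(status)]
avoid = [params for params, status in trials if blocks_similar_recommendations(status)]
```

Here the failed 220 °C trial appears in neither list, so that setting may be suggested again, while the rejected 260 °C setting is avoided.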

Further reading (BayBE)

For users or data scientists who want the full technical reference:

- BayBE Campaigns: https://emdgroup.github.io/baybe/0.13.2/userguide/campaigns.html

- BayBE Getting Recommendations: https://emdgroup.github.io/baybe/0.13.2/userguide/getting_recommendations.html